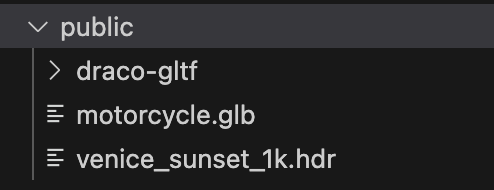

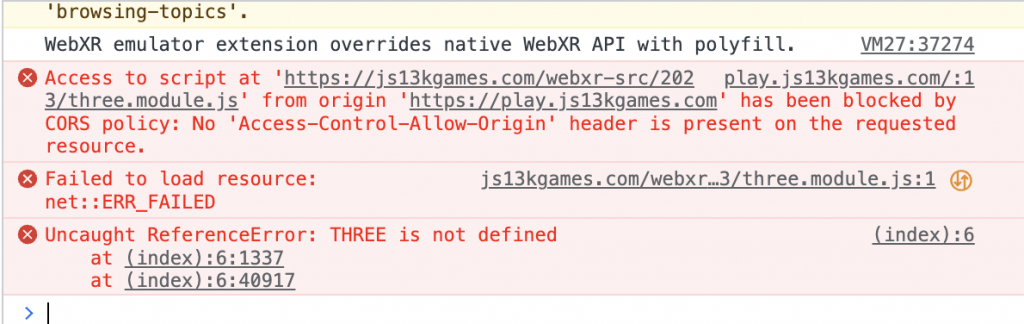

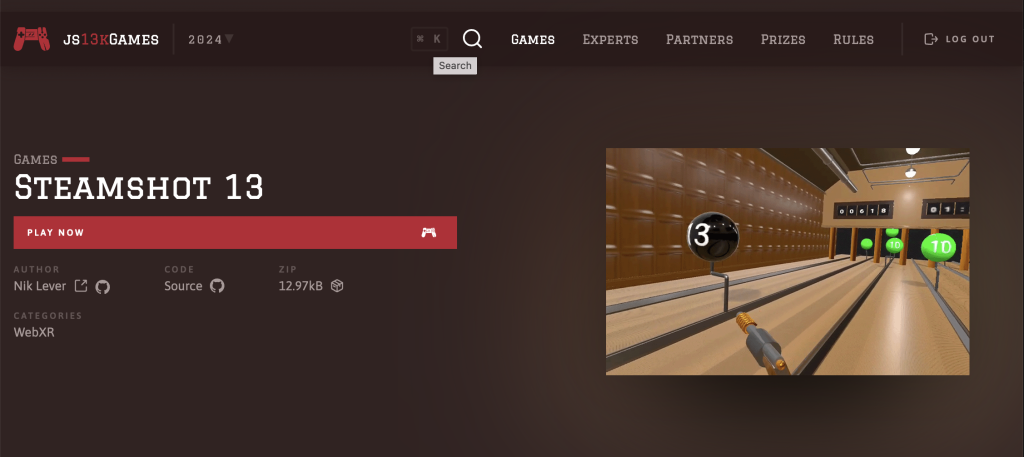

I entered this year’s js13kGames competition. In case you don’t know it, the challenge is to create a game that, when zipped, is no bigger than 13KB!! You can’t download online assets such as images, sounds, libraries or fonts. As last year, I targeted the WebXR category. This category makes an exception to the no-libraries rule and lets the developer use one external library: A-Frame, Babylon.js or Three.js. I chose Three.js.

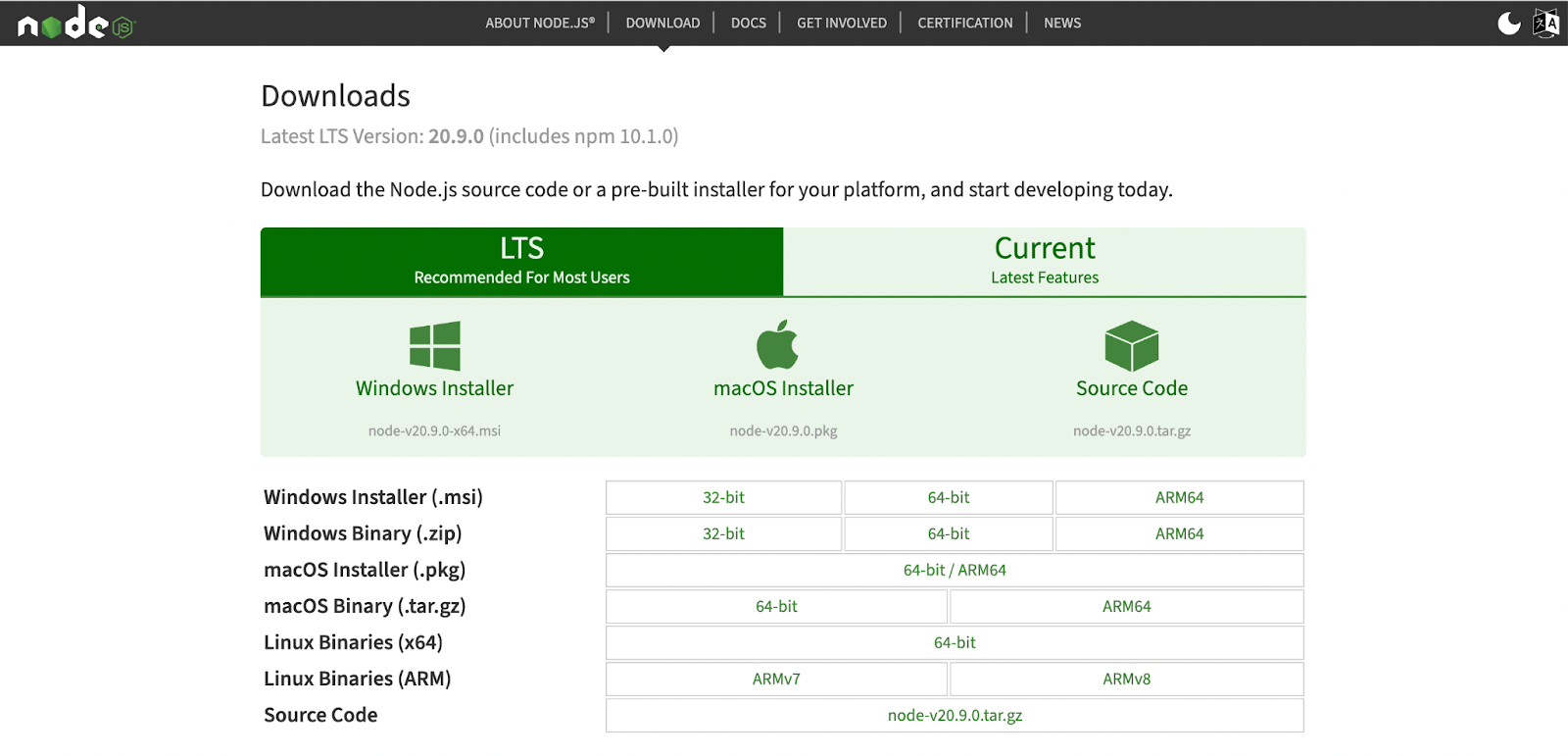

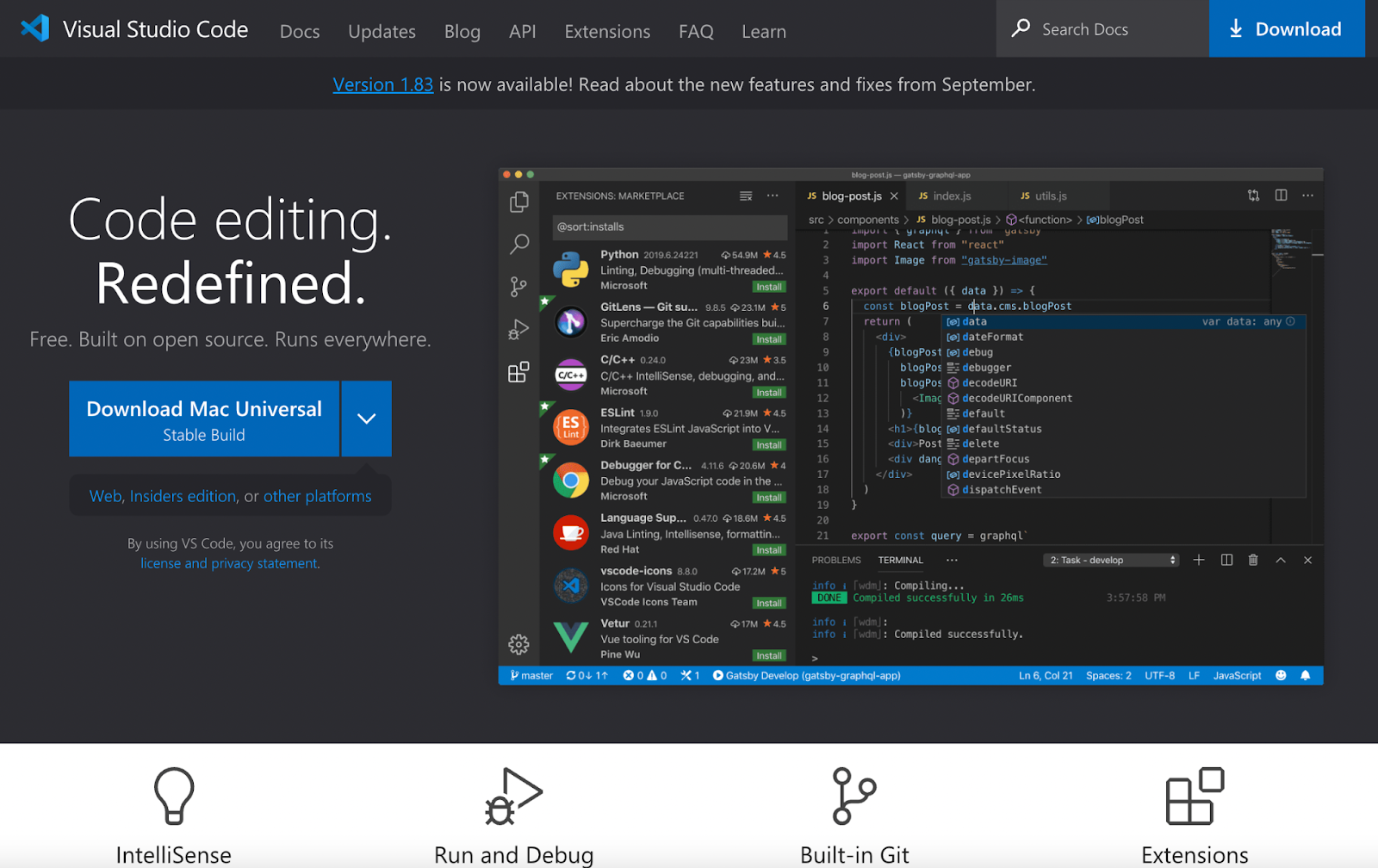

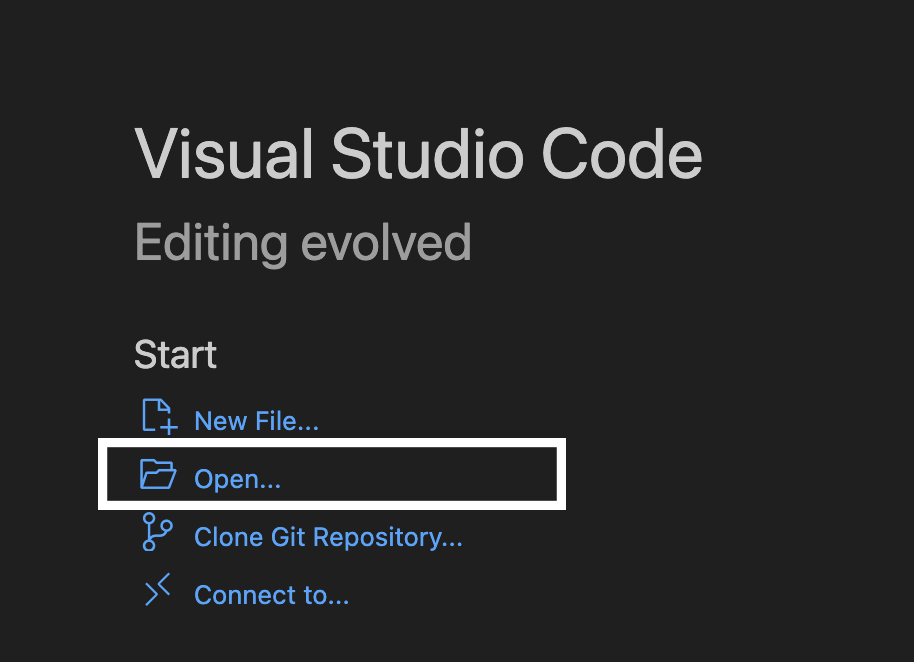

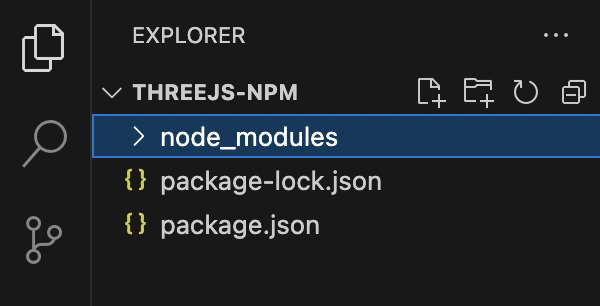

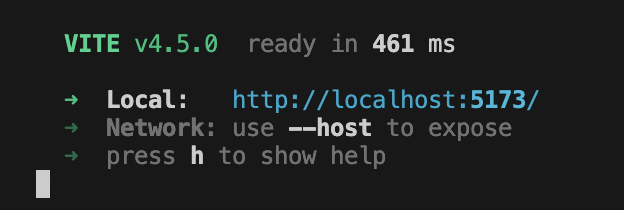

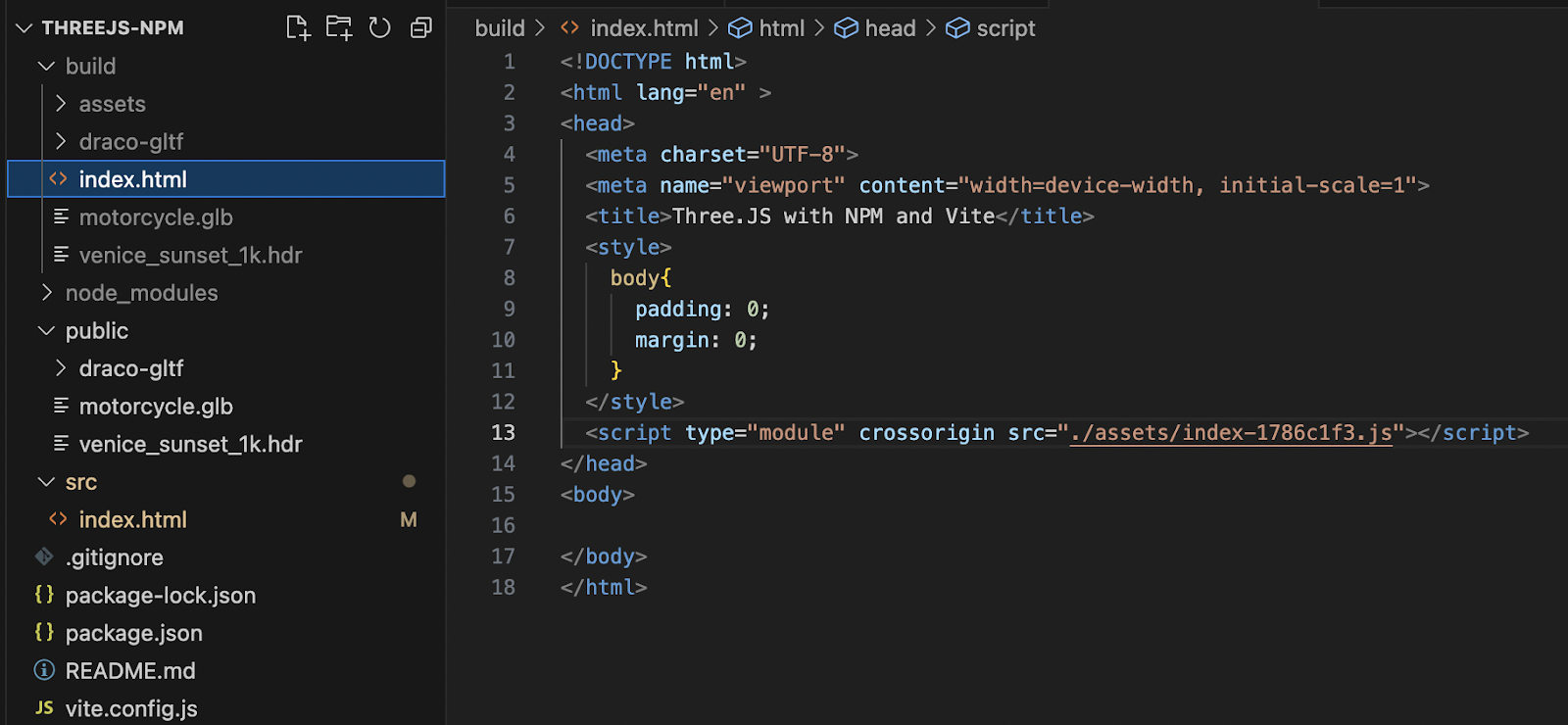

You need to set up an npm project. Here’s my template. To use it, download the zip, unzip it and open the folder in VSCode. Then run:

- `npm install` to install the dependencies.
- `npm run start` to start a test server.
- `npm run build` to create a distribution version in the `dist` folder and a zipped version in the `zipped` folder. It also gives a file size report.
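The js13kGames limit of 13 kilobytes works out at 13 × 1024 = 13,312 bytes for the zip. The template’s build step already prints its own report; the function below is only a hypothetical sketch of such a check (the name `sizeReport` and the zip path in the usage comment are my assumptions, not part of the template), written in plain JavaScript so it could be called from a Node build script.

```javascript
// Hypothetical helper (not part of the template): compare a zip size with the js13k limit.
const LIMIT_BYTES = 13 * 1024; // 13,312 bytes

function sizeReport(zipBytes, limit = LIMIT_BYTES) {
  const percent = (zipBytes / limit) * 100;
  return {
    bytes: zipBytes,
    limit,
    remaining: limit - zipBytes,                  // negative when over budget
    percentUsed: Math.round(percent * 10) / 10,   // one decimal place
    ok: zipBytes <= limit,
  };
}

// Usage from a Node script (the zip path is an assumption):
// const { statSync } = require("node:fs");
// console.log(sizeReport(statSync("zipped/game.zip").size));
```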

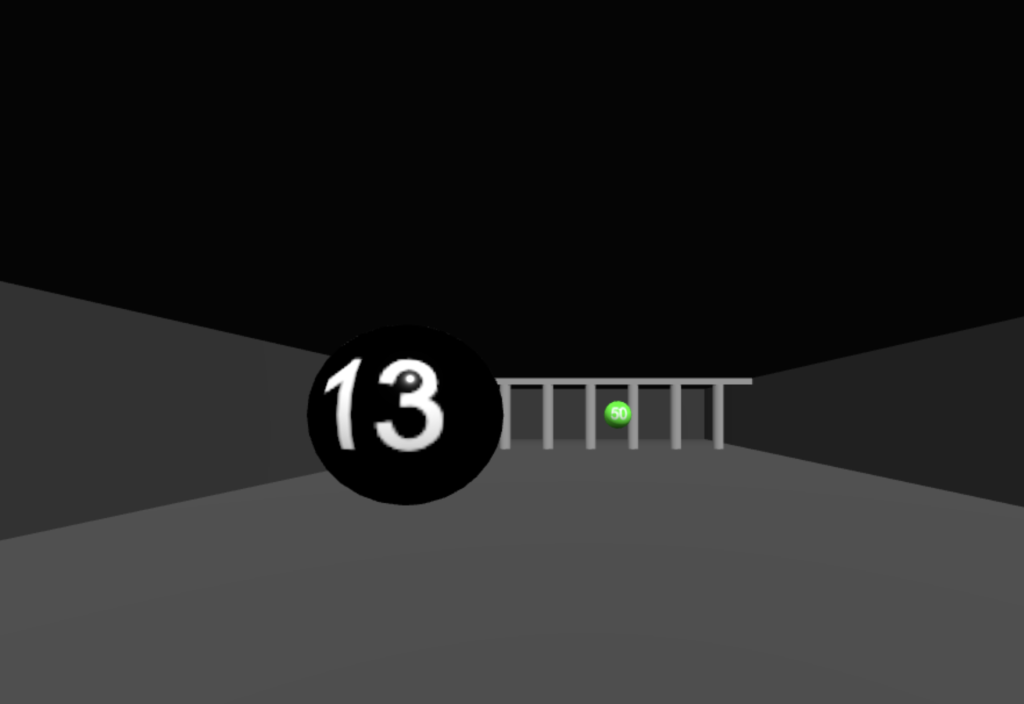

The first challenge is creating a game that fits the year’s theme. In 2024 the theme was Triskaidekaphobia, the fear of the number 13. I decided to create a shooting gallery where the player must shoot any ball with the number 13 on it.

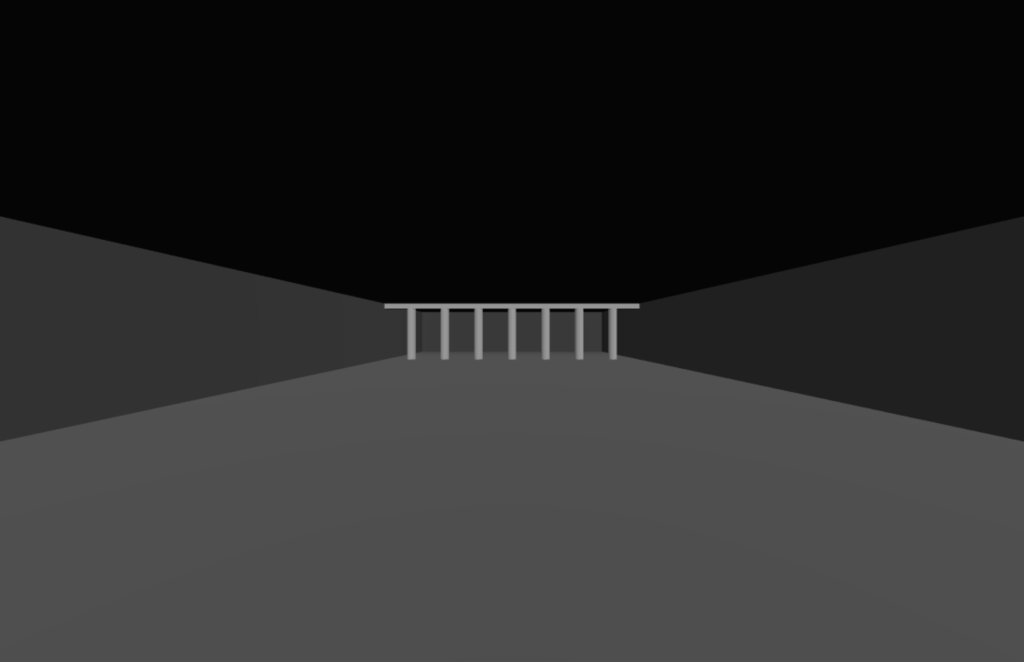

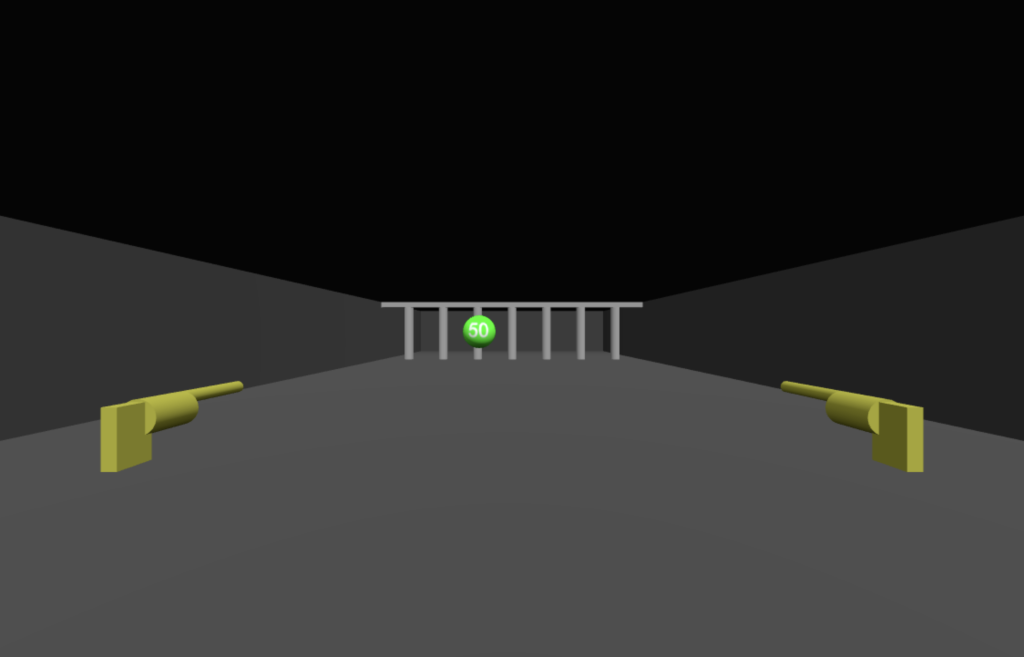

Initially I created a proxy of the environment in code.

export class Proxy{

constructor( scene ){

this.scene = scene;

const geo1 = new THREE.CylinderGeometry( 0.25, 0.25, 3 );

const mat1 = new THREE.MeshStandardMaterial( { color: 0x999999 } );

const mat2 = new THREE.MeshStandardMaterial( { color: 0x444444, side: THREE.BackSide, wireframe: false } );

const column = new THREE.Mesh( geo1, mat1 );

for ( let x = -6; x<=6; x+=2 ){

const columnA = column.clone();

columnA.position.set( x, 1.5, -20);

scene.add( columnA );

}

const geo2 = new THREE.PlaneGeometry( 15, 25 );

geo2.rotateX( -Math.PI/2 );

const floor = new THREE.Mesh( geo2, mat1 );

floor.position.set( 0, 0, -12.5 );

//scene.add( floor );

const geo3 = new THREE.BoxGeometry( 15, 0.6, 0.6 );

const lintel = new THREE.Mesh( geo3, mat1 );

lintel.position.set( 0, 3.3, -20 );

scene.add( lintel );

const geo4 = new THREE.BoxGeometry( 15, 3.3, 36 );

const room = new THREE.Mesh( geo4, mat2 );

room.position.set( 0, 1.65, -10 );

scene.add( room );

}

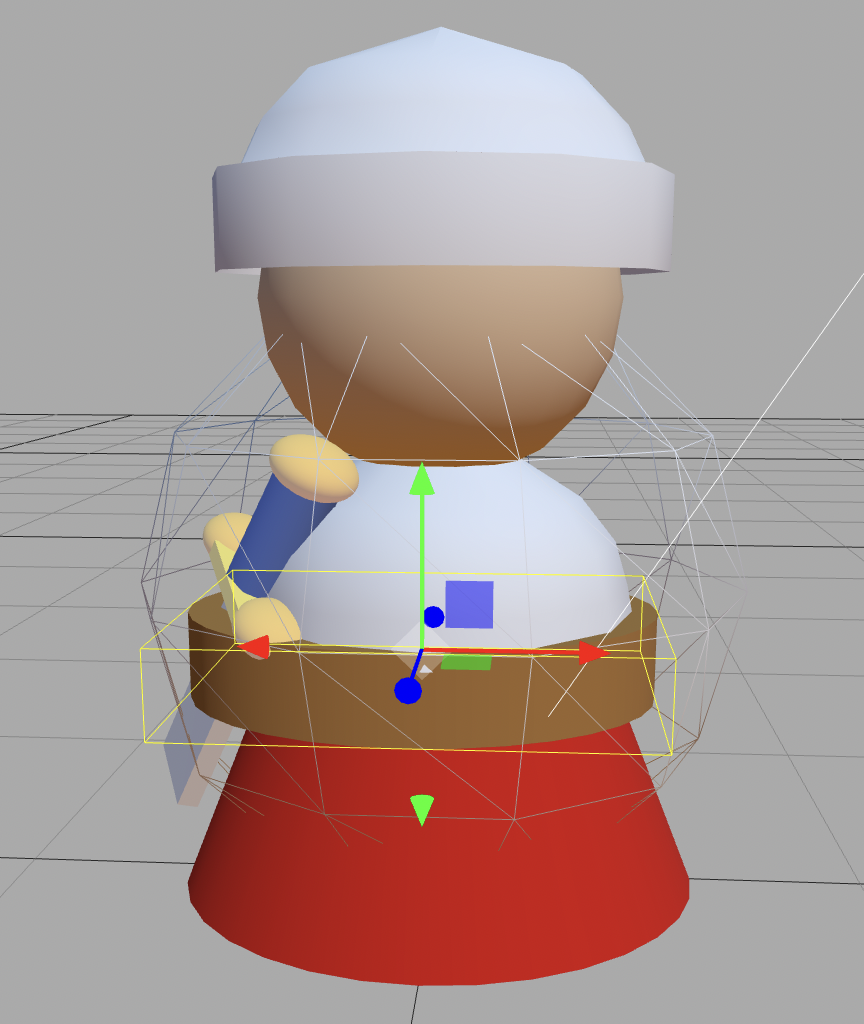

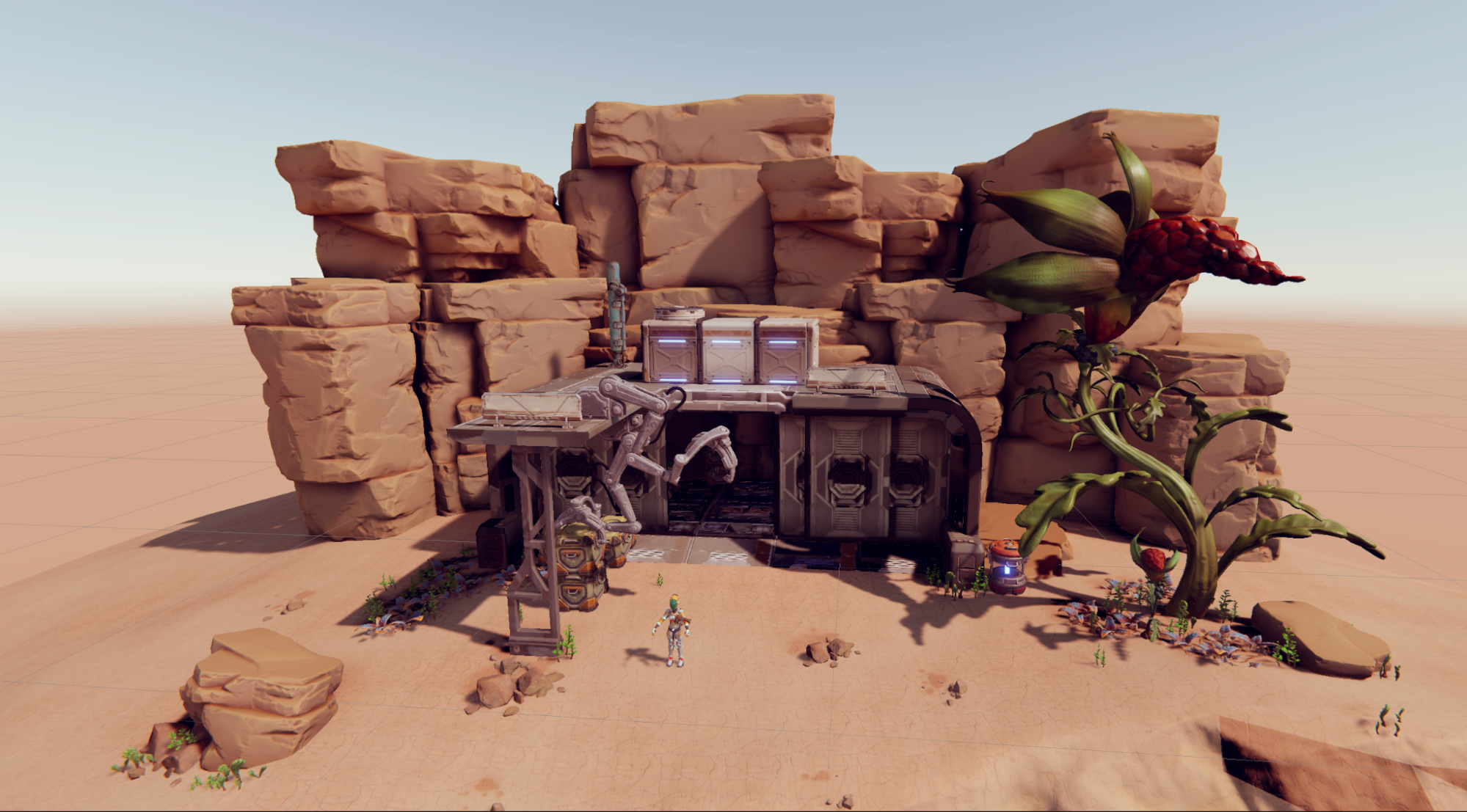

}I was aiming for a look like this –

A bit of a tall-order but you have to have a goal!!

The next step was creating the balls that move toward the player.

Just a simple class.

```javascript
export class Ball{
    static states = { DROPPING: 1, ROTATE: 2, FIRED: 3 };
    // One canvas and one sphere geometry are shared by every ball
    static canvas = document.createElement('canvas');
    static geometry = new THREE.SphereGeometry( 0.5 );

    constructor( scene, num, minus = false, xPos = -1, speed = 0.1 ){
        if (Ball.canvas.width != 256 ){
            Ball.canvas.width = 256;
            Ball.canvas.height = 128;
        }

        const context = Ball.canvas.getContext('2d');

        // Background colour: black for 13, red for "minus" balls, green otherwise
        if (num == 13){
            context.fillStyle = "#000";
        }else if (minus){
            context.fillStyle = "#f00";
        }else{
            context.fillStyle = "#0f0";
        }

        this.num = num;
        this.speed = speed;

        // Paint the number in white on the coloured background
        context.fillRect(0, 0, 256, 128);
        context.fillStyle = "#fff";
        context.font = "48px Arial";
        context.textAlign = "center";
        context.textBaseline = "middle";
        context.fillText(String(num), 128, 64 );

        const tex = new THREE.CanvasTexture( Ball.canvas );
        const material = new THREE.MeshStandardMaterial( { map: tex, roughness: 0.1 } );

        this.mesh = new THREE.Mesh( Ball.geometry, material );
        this.mesh.position.set( xPos, 4, -20 );
        this.mesh.rotateY( Math.PI/2 );
        this.state = Ball.states.DROPPING;

        scene.add( this.mesh );
    }

    update(game){
        switch(this.state){
            case Ball.states.DROPPING:
                // Fall until the ball reaches firing height
                this.mesh.position.y -= 0.1;
                if (this.mesh.position.y <= 1.6){
                    this.state = Ball.states.ROTATE;
                    this.mesh.position.y = 1.6;
                }
                break;
            case Ball.states.ROTATE:
                // Spin the ball until its number faces the player
                this.mesh.rotateY( -0.1 );
                if (this.mesh.rotation.y < -Math.PI/2.1){
                    this.state = Ball.states.FIRED;
                }
                break;
            case Ball.states.FIRED:
                // Move towards the player
                this.mesh.position.z += this.speed;
                break;
        }

        // Once the ball has passed the player, free its texture and remove it
        if (this.mesh.position.z > 2){
            this.mesh.material.map.dispose();
            if (game) game.removeBall( this );
        }
    }
}
```

I created a proxy gun.

```javascript
export class Gun extends THREE.Group{
    constructor(){
        super();
        this.createProxy();
    }

    // Placeholder gun built from a barrel, a body and a handle
    createProxy(){
        const mat = new THREE.MeshStandardMaterial( { color: 0xAAAA22 } );

        const geo1 = new THREE.CylinderGeometry( 0.01, 0.01, 0.15, 20 );
        const barrel = new THREE.Mesh( geo1, mat );
        barrel.rotation.x = -Math.PI/2;
        barrel.position.z = -0.1;

        const geo2 = new THREE.CylinderGeometry( 0.025, 0.025, 0.06, 20 );
        const body = new THREE.Mesh( geo2, mat );
        body.rotation.x = -Math.PI/2;
        body.position.set( 0, -0.015, -0.042 );

        const geo3 = new THREE.BoxGeometry( 0.02, 0.08, 0.04 );
        const handle = new THREE.Mesh( geo3, mat );
        handle.position.set( 0, -0.034, 0);

        this.add( barrel );
        this.add( body );
        this.add( handle );
    }
}
```

And a bullet.
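A bullet travels `dt * 2` units per frame. Rather than jumping that whole distance at once, its update method advances in steps of at most 0.5 units and checks for a hit after each one, which makes it much harder for a bullet to pass straight through a ball in a single frame. Stripped of three.js, the idea can be sketched like this; `sweepBullet` and its plain `{x, y, z}` objects are hypothetical, not part of the game’s code.

```javascript
// Hypothetical sketch of sub-stepped hit testing, independent of three.js.
// pos: start position, dir: unit direction, dist: distance to travel this frame,
// balls: array of {x, y, z} centres, radius: hit distance, maxStep: largest single move.
function sweepBullet(pos, dir, dist, balls, radius = 0.5, maxStep = 0.5) {
  const p = { ...pos };
  let remaining = dist;
  while (remaining > 0) {
    const step = Math.min(maxStep, remaining);
    remaining -= step;
    p.x += dir.x * step;
    p.y += dir.y * step;
    p.z += dir.z * step;
    // Test every ball after each small step
    for (let i = 0; i < balls.length; i++) {
      const b = balls[i];
      if (Math.hypot(p.x - b.x, p.y - b.y, p.z - b.z) < radius) {
        return { hit: i, pos: p };
      }
    }
  }
  return { hit: -1, pos: p }; // no ball hit this frame
}
```

Passing the whole distance as `maxStep` reproduces the problem the sub-steps avoid: the bullet ends up beyond the ball and the hit is missed.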

```javascript
import { Ball } from "./ball.js";

export class Bullet{
    constructor( game, controller ){
        const geo1 = new THREE.CylinderGeometry( 0.008, 0.008, 0.07, 16 );
        // Lay the cylinder along the z axis so it points the way the controller points
        geo1.rotateX( -Math.PI/2 );
        const material = new THREE.MeshBasicMaterial( { color: 0xFFAA00 });
        const mesh = new THREE.Mesh( geo1, material );

        // Start at the controller's world position and orientation
        const v = new THREE.Vector3();
        const q = new THREE.Quaternion();
        mesh.position.copy( controller.getWorldPosition( v ) );
        mesh.quaternion.copy( controller.getWorldQuaternion( q ) );
        game.scene.add( mesh );

        this.tmpVec = new THREE.Vector3();
        this.tmpVec2 = new THREE.Vector3();
        this.mesh = mesh;
        this.game = game;
    }

    update( dt ){
        // Distance to travel this frame
        let dist = dt * 2;
        let count = 0;

        // Move in steps of at most 0.5 units, testing for a hit after each step
        while(count<1000){
            count++;
            if (dist > 0.5){
                dist -= 0.5;
                this.mesh.translateZ( -0.5 );
            }else{
                this.mesh.translateZ( -dist );
                dist = 0;
            }

            this.mesh.getWorldPosition( this.tmpVec );
            let hit = false;

            this.game.balls.forEach( ball => {
                if (!hit && ball.state == Ball.states.FIRED ){
                    ball.mesh.getWorldPosition( this.tmpVec2 );
                    // Ball radius is 0.5, so the bullet is inside the ball
                    if ( this.tmpVec.distanceTo( this.tmpVec2 ) < 0.5 ){
                        hit = true;
                        ball.hit( this.game );
                    }
                }
            });

            if (hit){
                this.game.removeBullet( this );
                return;
            }
            if (dist == 0) break;
        }

        // The loop has already moved the bullet for this frame;
        // remove it once it is well outside the gallery
        if ( this.mesh.position.length() > 20 ) this.game.removeBullet( this );
    }
}
```

Now I had a working basic game. Time to create the eye candy.

First, a score and timer display. In an immersive WebXR session the HTML page isn’t displayed in the headset, so you can’t simply create a div, position it and update its content using JavaScript. Instead I decided to create a mechanical counter.

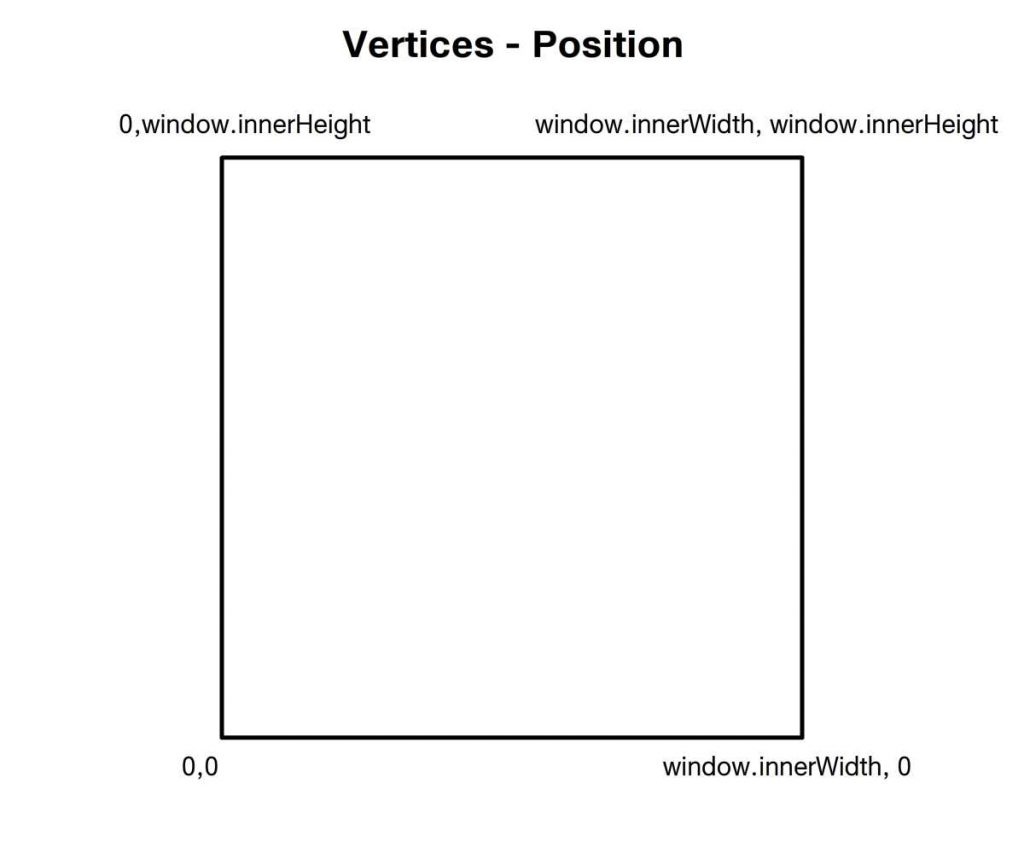

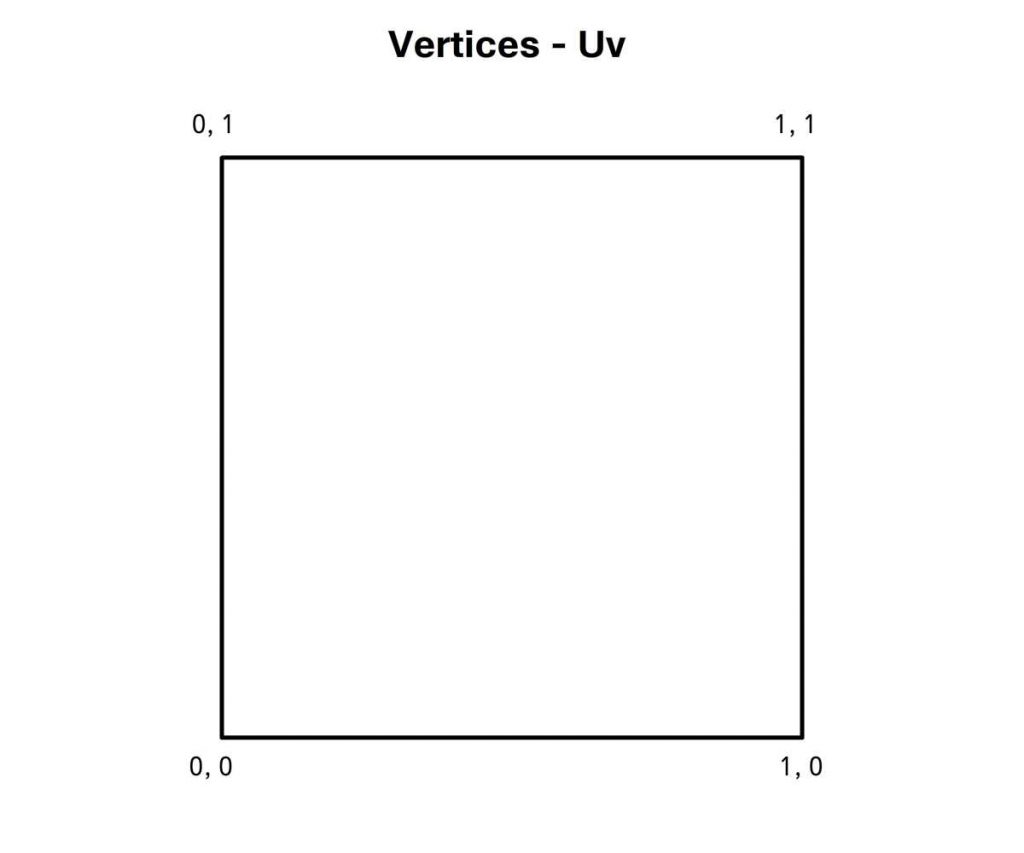

Each drum of the counter is a CylinderGeometry. To create the texture map with the digits on it I used the CanvasTexture class, which creates a texture from an HTML canvas, so you can use the canvas 2D drawing commands to ‘paint’ the texture.
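The arithmetic behind the drums: the texture strip holds 12 symbols (the digits 0 to 9, a colon and a space), so each symbol occupies 1/12 of a turn, or π/6 radians. Showing symbol n means rotating its drum by −n·π/6, plus a constant offset (0.4 radians in the Counter code) to line the symbol up with the viewer. The sketch below reproduces that mapping without three.js; `counterText` and `drumAngles` are hypothetical names, and it uses `padStart` in place of the padding loops.

```javascript
// Hypothetical helpers mirroring the Counter maths, without three.js.
const SYMBOLS = "0123456789: "; // same order as the texture strip
const STEP = Math.PI / 6;        // 12 symbols per revolution
const OFFSET = 0.4;              // lines a symbol up with the viewer

// Format a score as five digits, or a number of seconds as MM:SS
function counterText(value, isTimer) {
  if (!isTimer) return String(value).padStart(5, "0");
  const mins = Math.floor(value / 60);
  const secs = value - mins * 60;
  return String(mins).padStart(2, "0") + ":" + String(secs).padStart(2, "0");
}

// One x rotation per drum, one drum per character
function drumAngles(text) {
  return [...text].map((ch) => -STEP * SYMBOLS.indexOf(ch) - OFFSET);
}
```

For example, `drumAngles(counterText(125, true))` gives the five angles for "02:05", with the middle drum turned to symbol 10, the colon.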

export class Counter extends THREE.Group{

static texture;

static types = { SCORE: 0, TIMER: 1 };

constructor( scene, pos = new THREE.Vector3(), rot = new THREE.Euler ){

super();

this.scale.set( 1.5, 1.5, 1.5 );

scene.add( this );

this.position.copy( pos );

this.rotation.copy( rot );

if ( Counter.texture == null ){

const canvas = document.createElement('canvas');

canvas.width = 1024;

canvas.height = 64;

const context = canvas.getContext( '2d' );

context.fillStyle = "#000";

context.fillRect( 0, 0, 1024, 64 );

context.textAlign = "center";

context.textBaseline = "middle";

context.font = "48px Arial";

context.fillStyle = "#fff";

const inc = 1024/12;

const chars = [ "0", "1", "2", "3", "4", "5", "6", "7", "8", "9", ":", " " ];

let x = inc/2;

chars.forEach( char => {

context.setTransform(1, 0, 0, 1, 0, 0);

context.rotate( -Math.PI/2 );

context.translate( 0, x );

context.fillText( char, -32, 34 );

x += inc;

});

Counter.texture = new THREE.CanvasTexture( canvas );

Counter.texture.needsUpdate = true;

}

const r = 1;

const h = Math.PI * 2 * r;

const w = h/12;

const geometry = new THREE.CylinderGeometry( r, r, w );

geometry.rotateZ( -Math.PI/2 );

const material = new THREE.MeshStandardMaterial( { map: Counter.texture } );

const inc = w * 1.1;

const xPos = -inc * 2.5;

const zPos = -r * 0.8;

for( let i=0; i<5; i++ ){

const mesh = new THREE.Mesh( geometry, material );

mesh.position.set( xPos + inc*i, 0, zPos );

this.add( mesh );

}

this.type = Counter.types.SCORE;

this.displayValue = 0;

this.targetValue = 0;

}

set score(value){

if ( this.type != Counter.types.SCORE ) this.type = Counter.type.SCORE;

this.targetValue = value;

}

updateScore( ){

const inc = Math.PI/6;

let str = String( this.displayValue );

while ( str.length < 5 ) str = "0" + str;

const arr = str.split( "" );

this.children.forEach( child => {

const num = Number(arr.shift());

if (!isNaN(num)){

child.rotation.x = -inc*num - 0.4;

}

});

}

updateTime( ){

const inc = Math.PI/6;

let secs = this.displayValue;

let mins = Math.floor( secs/60 );

secs -= mins*60;

let secsStr = String( secs );

while( secsStr.length < 2 ) secsStr = "0" + secsStr;

let minsStr = String( mins );

while( minsStr.length < 2 ) minsStr = "0" + minsStr;

let timeStr = minsStr + ":" + secsStr;

let arr = timeStr.split( "" );

this.children.forEach( child => {

const num = Number(arr.shift());

if (isNaN(num)){

child.rotation.x = -inc*10 - 0.4;

}else{

child.rotation.x = -inc*num - 0.4;

}

});

}

set seconds(value){

if ( this.type != Counter.types.TIMER ) this.type = Counter.types.TIMER;

this.targetValue = value;

this.update( 0 );

}

get time(){

let secs = this.targetValue;

let mins = Math.floor( secs/60 );

secs -= mins*60;

let secsStr = String( secs );

while( secsStr.length < 2 ) secsStr = "0" + secsStr;

let minsStr = String( mins );

while( minsStr.length < 2 ) minsStr = "0" + minsStr;

return minsStr + ":" + secsStr;

}

update( dt ){

if ( this.targetValue != this.displayValue ){

if ( this.targetValue > this.displayValue ){

this.displayValue++;

}else{

this.displayValue--;

}

}

switch( this.type ){

case Counter.types.SCORE:

this.updateScore();

break;

case Counter.types.TIMER:

this.updateTime();

break

}

}

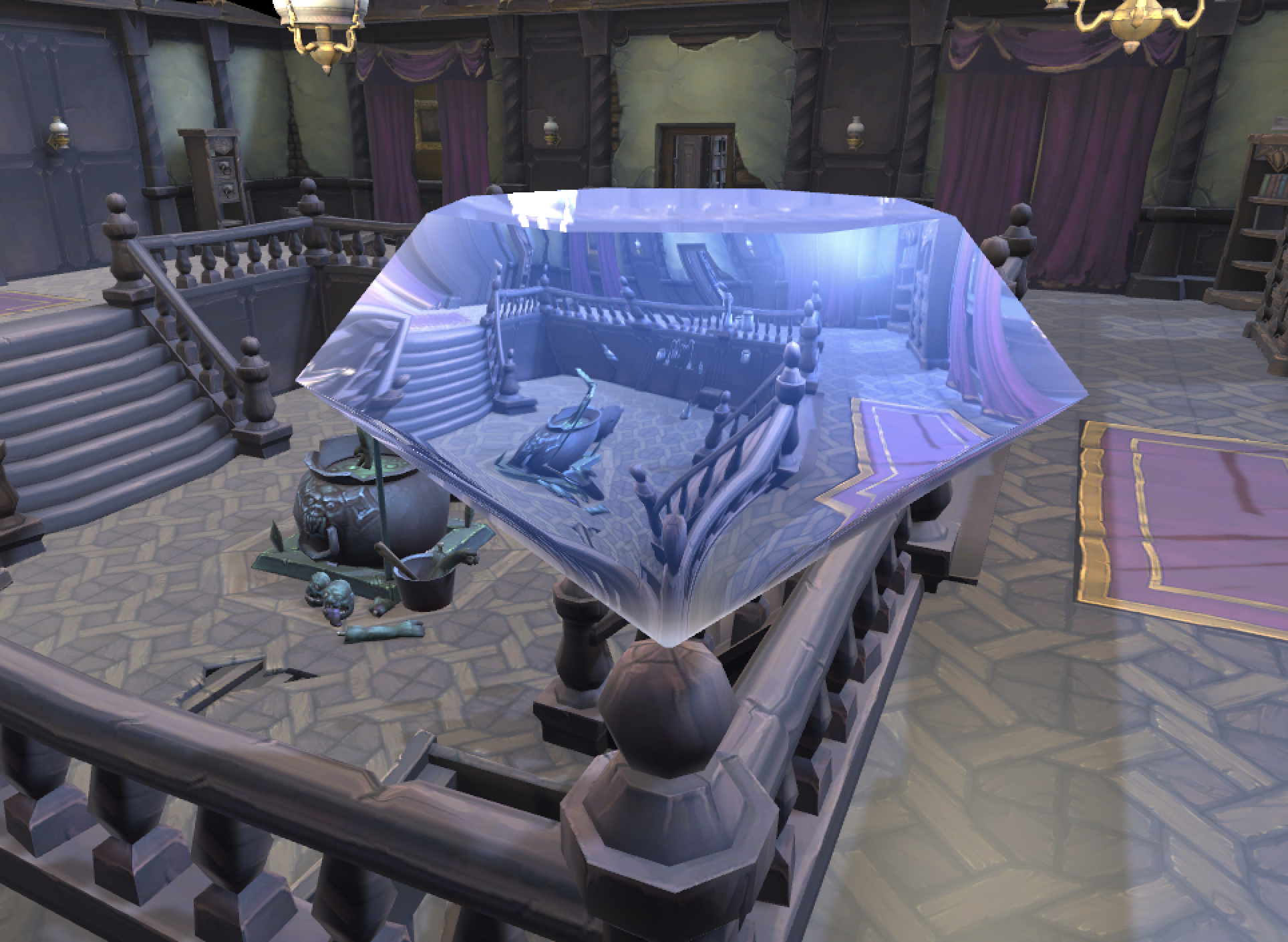

}Another challenge was creating an environment map. Which is essential when using MeshStandardMaterial with a roughness less than 1. As soon as it is smooth it reflects a map which by default is black. Resulting in very dark renders of shiny objects. With only 13k to play with you can’t simply load a bitmap texture. Instead you have to generate an environment map at runtime. A WebGLRenderer does not have to write to the screen. If you set a WenGLRenderTarget you can render to that. For a environment map you need it to be compiled in a special way. You can use a PMREMGenerator and the compileEquirectangularShader method.
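Why a 1024 × 512 render target? An equirectangular map covers 360° horizontally but only 180° vertically, so it has a 2:1 aspect ratio, and the three.js documentation for `PMREMGenerator.fromEquirectangular` describes 1024 × 512 as the ideal input size. The sketch below shows how a direction is looked up in such a map. It follows the same formula as the `equirectUv` function in three.js’s shader chunks (u from atan2(z, x), v from asin(y)), but it is an illustration, and `equirectPixel` is a hypothetical helper.

```javascript
// Map a unit direction to equirectangular texture coordinates in the range 0..1.
// Same formula as three.js's equirectUv() shader function; shown for illustration.
function equirectUv(x, y, z) {
  const u = Math.atan2(z, x) / (2 * Math.PI) + 0.5;              // full turn across
  const v = Math.asin(Math.min(Math.max(y, -1), 1)) / Math.PI + 0.5; // half turn up/down
  return { u, v };
}

// Hypothetical helper: convert a direction to a pixel position in a width x height map
function equirectPixel(dir, width = 1024, height = 512) {
  const { u, v } = equirectUv(dir.x, dir.y, dir.z);
  return { px: u * width, py: v * height };
}
```

Because u spans 2π radians and v only π, a map with twice as many pixels across as down keeps the angular resolution equal in both directions.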

```javascript
// Only build the environment map once
if (this.scene.environment == null){
    // Render the scene into an off-screen 1024 x 512 target instead of the screen
    this.renderTarget = new THREE.WebGLRenderTarget(1024, 512);
    this.renderer.setSize( 1024, 512 );
    this.renderer.setRenderTarget( this.renderTarget );
    this.renderer.render( this.scene, this.camera );

    // Convert the render into a prefiltered (PMREM) environment map
    const pmremGenerator = new THREE.PMREMGenerator( this.renderer );
    pmremGenerator.compileEquirectangularShader();
    const envmap = pmremGenerator.fromEquirectangular( this.renderTarget.texture ).texture;
    pmremGenerator.dispose();

    this.scene.environment = envmap;

    // Go back to rendering to the screen at the normal size
    this.renderer.setRenderTarget( null );
    this.resize();
}
```

I also needed a way of rendering wood. I decided to use noise, as in this CodePen example.

The NoiseMaterial class does the heavy lifting.

```javascript
export class NoiseMaterial extends THREE.MeshStandardMaterial{
    constructor( type, options ){
        super( options );

        if ( this.noise == null ) this.initNoise();

        // Choose the light and dark wood colours
        switch( type ){
            case 'oak':
                this.wood( 0x261308, 0x110302 );
                break;
            case 'darkwood':
                this.wood( 0x563308, 0x211302 );
                break;
            case 'wood':
            default:
                this.wood();
                break;
        }

        // Patch the standard shader just before it is compiled
        this.onBeforeCompile = shader => {
            // Expose the wood uniforms to the shader
            for (const [key, value] of Object.entries(this.userData.uniforms)) {
                shader.uniforms[key] = value;
            }

            // Pass the model-space position through to the fragment shader
            shader.vertexShader = shader.vertexShader.replace( '#include <common>', `
                varying vec3 vModelPosition;
                #include <common>
            `);

            shader.vertexShader = shader.vertexShader.replace( '#include <begin_vertex>', `
                vModelPosition = vec3(position);
                #include <begin_vertex>
            `);

            // Add the uniform declarations and noise functions...
            shader.fragmentShader = shader.fragmentShader.replace(
                '#include <common>',
                this.userData.colorVars );

            // ...and tint the diffuse colour with the wood pattern
            shader.fragmentShader = shader.fragmentShader.replace(
                '#include <color_fragment>',
                this.userData.colorFrag );
        }
    }

    wood( light = 0x735735, dark = 0x3f2d17 ){
        this.userData.colorVars = `
            uniform vec3 u_LightColor;
            uniform vec3 u_DarkColor;
            uniform float u_Frequency;
            uniform float u_NoiseScale;
            uniform float u_RingScale;
            uniform float u_Contrast;
            varying vec3 vModelPosition;
            ${this.noise}
            #include <common>
        `;

        this.userData.colorFrag = `
            float n = snoise( vModelPosition.xxx )/3.0 + 0.5;
            float ring = fract( u_Frequency * vModelPosition.x + u_NoiseScale * n );
            ring *= u_Contrast * ( 1.0 - ring );
            // Adjust ring smoothness and shape, and add some noise
            float lerp = pow( ring, u_RingScale ) + n;
            diffuseColor.xyz *= mix(u_DarkColor, u_LightColor, lerp);
        `;

        const uniforms = {};
        uniforms.u_time = { value: 0.0 };
        uniforms.u_resolution = { value: new THREE.Vector2() };
        uniforms.u_LightColor = { value: new THREE.Color(light) };
        uniforms.u_DarkColor = { value: new THREE.Color(dark) };
        uniforms.u_Frequency = { value: 55.0 };
        uniforms.u_NoiseScale = { value: 2.0 };
        uniforms.u_RingScale = { value: 0.26 };
        uniforms.u_Contrast = { value: 10.0 };
        this.userData.uniforms = uniforms;
    }

    initNoise(){
        this.noise = `
            //
            // Description : Array and textureless GLSL 2D/3D/4D simplex
            // noise functions.
            // Author : Ian McEwan, Ashima Arts.
            // Maintainer : stegu
            // Lastmod : 20110822 (ijm)
            // License : Copyright (C) 2011 Ashima Arts. All rights reserved.
            // Distributed under the MIT License. See LICENSE file.
            // https://github.com/ashima/webgl-noise
            // https://github.com/stegu/webgl-noise
            //

            vec3 mod289(vec3 x) {
                return x - floor(x * (1.0 / 289.0)) * 289.0;
            }

            vec4 mod289(vec4 x) {
                return x - floor(x * (1.0 / 289.0)) * 289.0;
            }

            vec4 permute(vec4 x) {
                return mod289(((x*34.0)+1.0)*x);
            }

            // Permutation polynomial (ring size 289 = 17*17)
            vec3 permute(vec3 x) {
                return mod289(((x*34.0)+1.0)*x);
            }

            float permute(float x){
                return x - floor(x * (1.0 / 289.0)) * 289.0;
            }

            vec4 taylorInvSqrt(vec4 r){
                return 1.79284291400159 - 0.85373472095314 * r;
            }

            float snoise(vec3 v){
                const vec2 C = vec2(1.0/6.0, 1.0/3.0) ;
                const vec4 D = vec4(0.0, 0.5, 1.0, 2.0);

                // First corner
                vec3 i = floor(v + dot(v, C.yyy) );
                vec3 x0 = v - i + dot(i, C.xxx) ;

                // Other corners
                vec3 g = step(x0.yzx, x0.xyz);
                vec3 l = 1.0 - g;
                vec3 i1 = min( g.xyz, l.zxy );
                vec3 i2 = max( g.xyz, l.zxy );

                // x0 = x0 - 0.0 + 0.0 * C.xxx;
                // x1 = x0 - i1 + 1.0 * C.xxx;
                // x2 = x0 - i2 + 2.0 * C.xxx;
                // x3 = x0 - 1.0 + 3.0 * C.xxx;
                vec3 x1 = x0 - i1 + C.xxx;
                vec3 x2 = x0 - i2 + C.yyy; // 2.0*C.x = 1/3 = C.y
                vec3 x3 = x0 - D.yyy; // -1.0+3.0*C.x = -0.5 = -D.y

                // Permutations
                i = mod289(i);
                vec4 p = permute( permute( permute(
                    i.z + vec4(0.0, i1.z, i2.z, 1.0 ))
                    + i.y + vec4(0.0, i1.y, i2.y, 1.0 ))
                    + i.x + vec4(0.0, i1.x, i2.x, 1.0 ));

                // Gradients: 7x7 points over a square, mapped onto an octahedron.
                // The ring size 17*17 = 289 is close to a multiple of 49 (49*6 = 294)
                float n_ = 0.142857142857; // 1.0/7.0
                vec3 ns = n_ * D.wyz - D.xzx;

                vec4 j = p - 49.0 * floor(p * ns.z * ns.z); // mod(p,7*7)

                vec4 x_ = floor(j * ns.z);
                vec4 y_ = floor(j - 7.0 * x_ ); // mod(j,N)

                vec4 x = x_ *ns.x + ns.yyyy;
                vec4 y = y_ *ns.x + ns.yyyy;
                vec4 h = 1.0 - abs(x) - abs(y);

                vec4 b0 = vec4( x.xy, y.xy );
                vec4 b1 = vec4( x.zw, y.zw );

                //vec4 s0 = vec4(lessThan(b0,0.0))*2.0 - 1.0;
                //vec4 s1 = vec4(lessThan(b1,0.0))*2.0 - 1.0;
                vec4 s0 = floor(b0)*2.0 + 1.0;
                vec4 s1 = floor(b1)*2.0 + 1.0;
                vec4 sh = -step(h, vec4(0.0));

                vec4 a0 = b0.xzyw + s0.xzyw*sh.xxyy ;
                vec4 a1 = b1.xzyw + s1.xzyw*sh.zzww ;

                vec3 p0 = vec3(a0.xy,h.x);
                vec3 p1 = vec3(a0.zw,h.y);
                vec3 p2 = vec3(a1.xy,h.z);
                vec3 p3 = vec3(a1.zw,h.w);

                //Normalise gradients
                vec4 norm = taylorInvSqrt(vec4(dot(p0,p0), dot(p1,p1), dot(p2, p2), dot(p3,p3)));
                p0 *= norm.x;
                p1 *= norm.y;
                p2 *= norm.z;
                p3 *= norm.w;

                // Mix final noise value
                vec4 m = max(0.6 - vec4(dot(x0,x0), dot(x1,x1), dot(x2,x2), dot(x3,x3)), 0.0);
                m = m * m;
                return 42.0 * dot( m*m, vec4( dot(p0,x0), dot(p1,x1),
                    dot(p2,x2), dot(p3,x3) ) );
            }
        `;
    }
}
```
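To see what the wood shader does to a single pixel, here is the colorFrag arithmetic ported to plain JavaScript. It is an illustration only: the noise value `n` is passed in rather than computed with `snoise()`, `woodMix` and `woodChannel` are hypothetical names, and the defaults are the uniform values set in `wood()`. `fract(frequency * x + noiseScale * n)` is a sawtooth that repeats `frequency` times per unit along x; multiplying it by `contrast * (1 - ring)` turns each tooth into a hump that is zero at the ring edges, and `pow` with a small exponent flattens the hump’s top.

```javascript
// Plain-JavaScript port of the colorFrag maths, for illustration only.
const fract = (v) => v - Math.floor(v);
const mix = (a, b, t) => a + (b - a) * t; // like GLSL mix(); t is not clamped

// x: model-space x coordinate; n: noise value (snoise(...)/3.0 + 0.5 in the shader)
function woodMix(x, n, { frequency = 55.0, noiseScale = 2.0, ringScale = 0.26, contrast = 10.0 } = {}) {
  let ring = fract(frequency * x + noiseScale * n); // sawtooth in 0..1
  ring *= contrast * (1.0 - ring);                   // hump: 0 at the ring edges
  return Math.pow(ring, ringScale) + n;              // the shader's "lerp" value
}

// One colour channel: blend from dark to light using the lerp value
function woodChannel(dark, light, x, n, options) {
  return mix(dark, light, woodMix(x, n, options));
}
```

In the shader the same blend is done on all three channels at once and the result multiplies `diffuseColor`.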

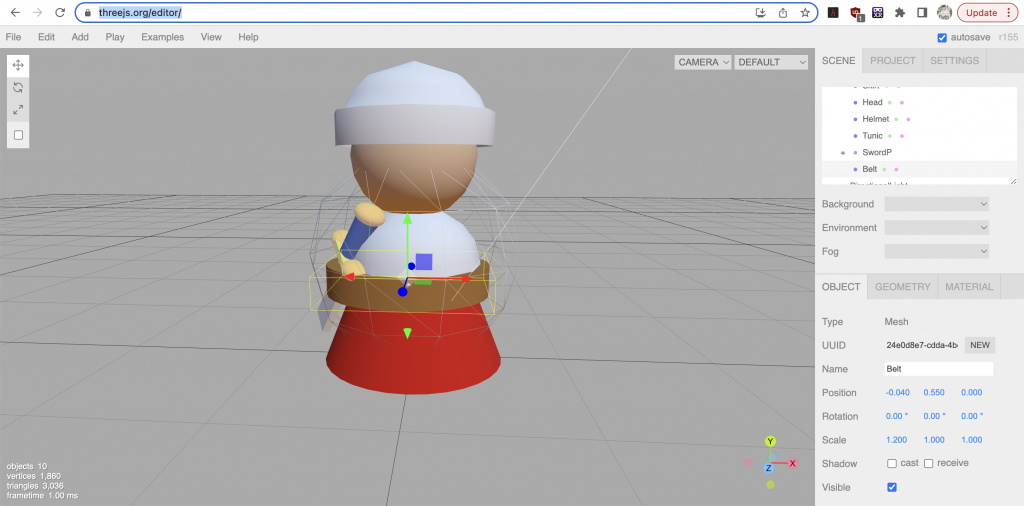

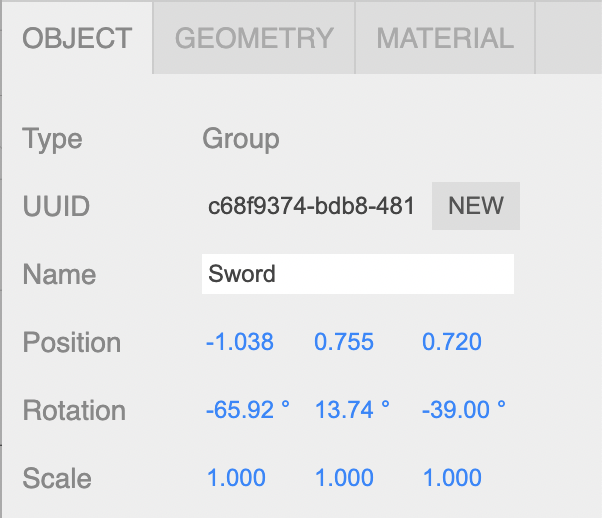

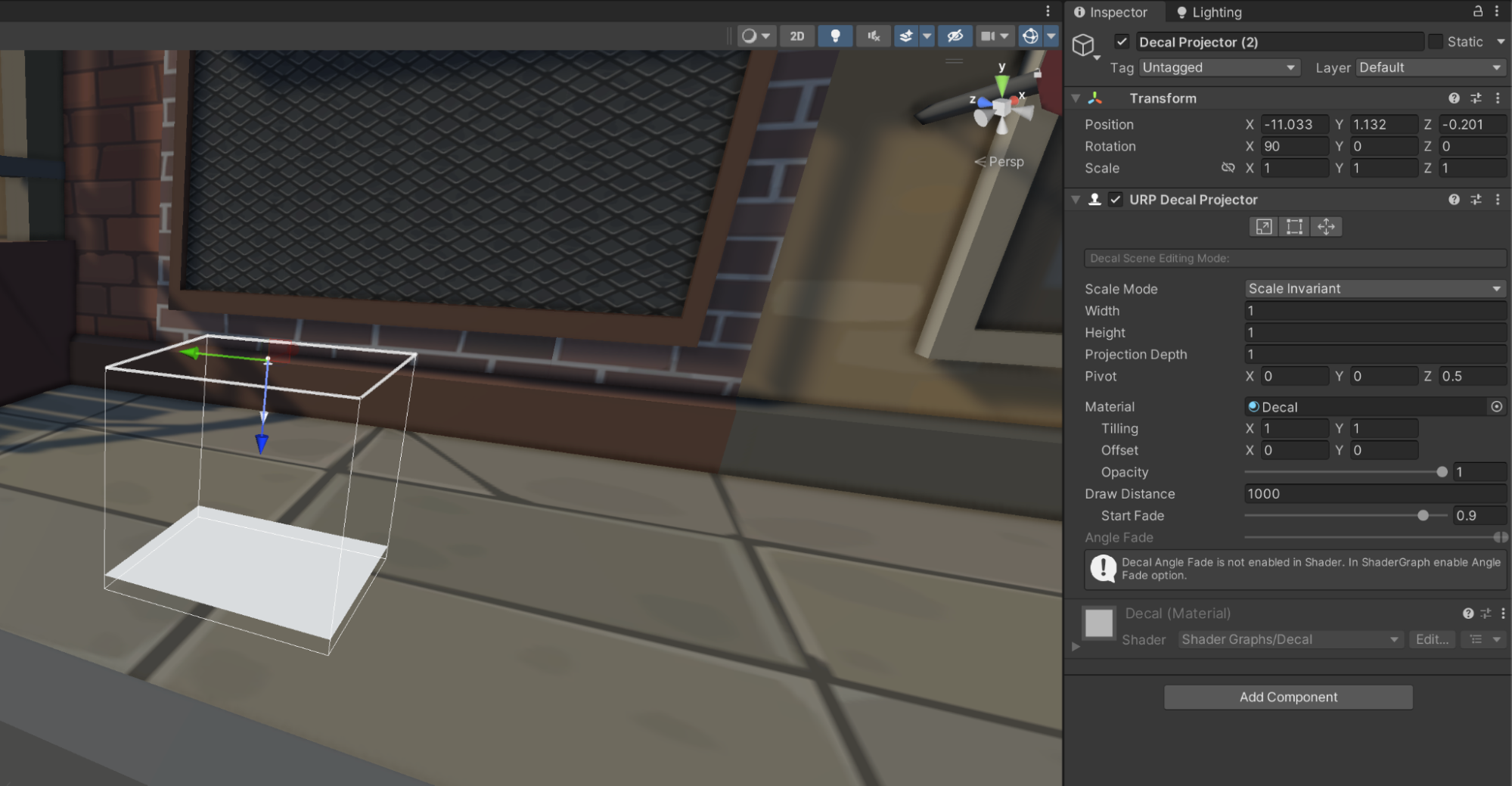

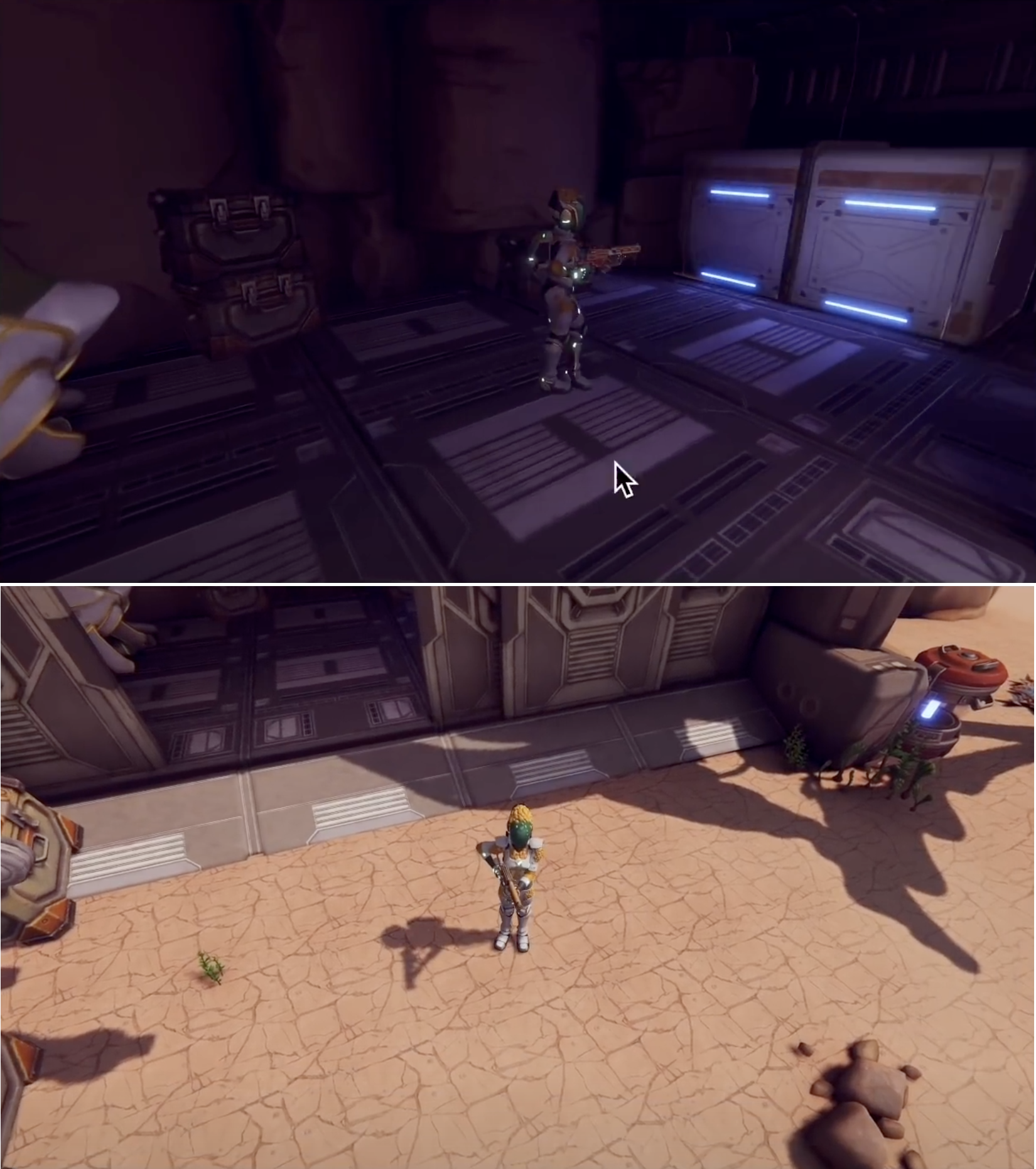

I created the panelling, a gun and a ceiling with lights and air-conditioning pipes.

When creating the panelling and the gun I made use of the Three.js Shape class and ExtrudeGeometry.

Play testing showed that the frame rate was suffering on my Quest 2, so I decided to swap the 3D panelling for a texture, which I created by rendering the panelling model once into a WebGLRenderTarget.

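```javascript
// Temporarily add the extruded panelling model so it can be rendered once
const panelling = new Panelling( 1.5, 1.5, true );
panelling.position.set( 0.12, 1.34, -1 );
this.scene.add( panelling );

// Small, repeating, mipmapped target to tile across the walls
const renderTarget = new THREE.WebGLRenderTarget(128, 128, {
    generateMipmaps: true,
    wrapS: THREE.RepeatWrapping,
    wrapT: THREE.RepeatWrapping,
    minFilter: THREE.LinearMipmapLinearFilter
});

this.camera.aspect = 1.2;
this.camera.updateProjectionMatrix();

this.renderer.setRenderTarget( renderTarget );
this.renderer.render( this.scene, this.camera );
// Switch back to rendering to the screen
this.renderer.setRenderTarget( null );

// Tile the rendered image across the wall panels
const map = renderTarget.texture;
map.repeat.set( 30, 6 );
this.panels[0].panelling.material.map = map;
this.panels[1].panelling.material.map = map;
// Recompile in case the materials were already compiled without a map
this.panels[0].panelling.material.needsUpdate = true;
this.panels[1].panelling.material.needsUpdate = true;

// The 3D model is no longer needed
this.scene.remove( panelling );
```

With some play testing I adjusted the bullet speed so that it was slow enough to make balls on the far left or right quite difficult to hit. Then I added varying ways for the balls to move, starting with moving straight ahead and ending up going up and down on a swivel. I also added a leaderboard and styled the various panels using CSS.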

I submitted the game.

Having submitted the game, I started work on a ThreeJS Path Editor. Creating the paths for complex shapes built with ExtrudeGeometry involved drawing on graph paper; there had to be a better way.

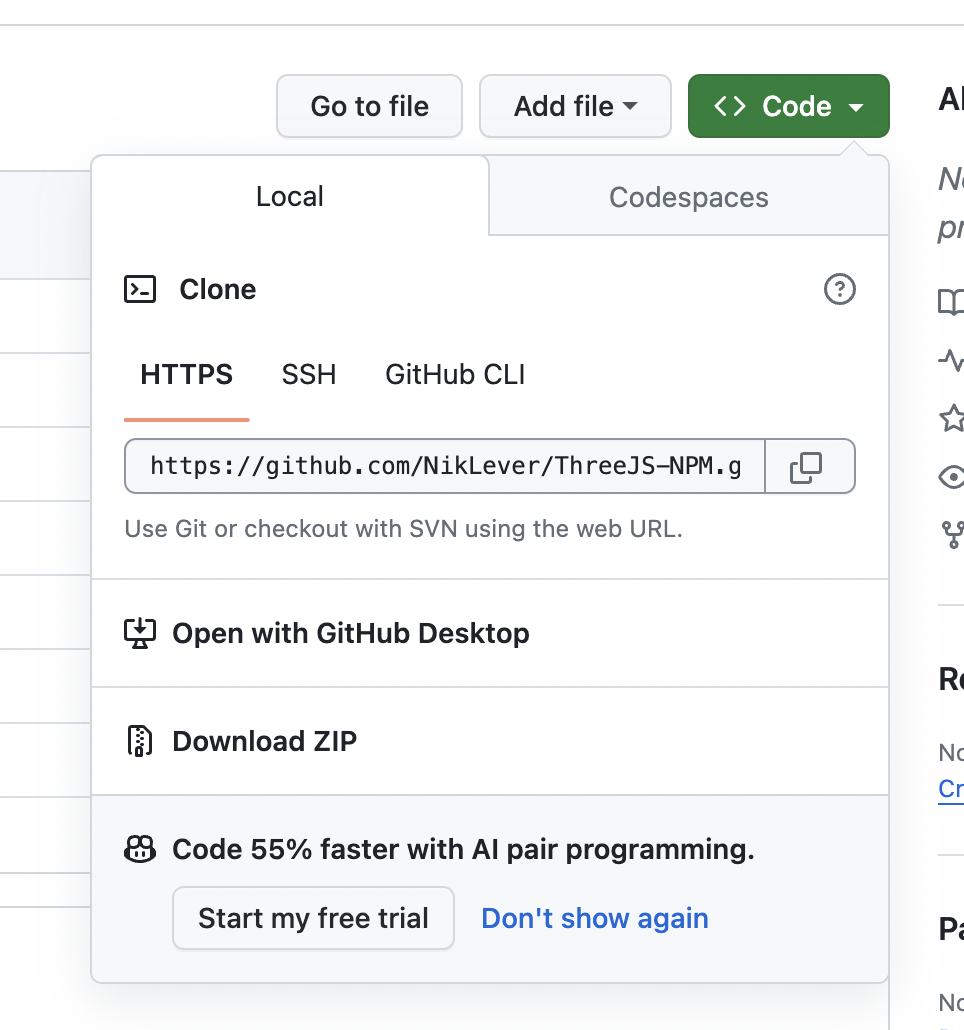

It’s all ready for next year’s competition. Source code here.

Nik Lever September 2024